Virtual Human Architecture

The Institute for Creative Technologies (ICT) Virtual Human Toolkit is based on the ICT Virtual Human Architecture. This architecture defines at an abstract level what modules are needed to realize a virtual human, and how these modules interact. The basic functionality of each module as well as its interface is well-defined, but its actual implementation falls outside the scope of the architecture. The architecture dictates the implementation of a distributed system where communication is mostly realized using message passing. This allows for multiple implementations of a certain module and simple substitution of one implementation for another during runtime. It also allows for distributed systems, where different modules run on separate computers.

ICT has developed a general framework, consisting of libraries, tools and methods that serve to support relatively rapid development of new modules. Using this framework, ICT and its partners have developed a variety of modules, both in the context of basic research as well as more applied projects. The toolkit provides some of the modules that have been transitioned from this research.

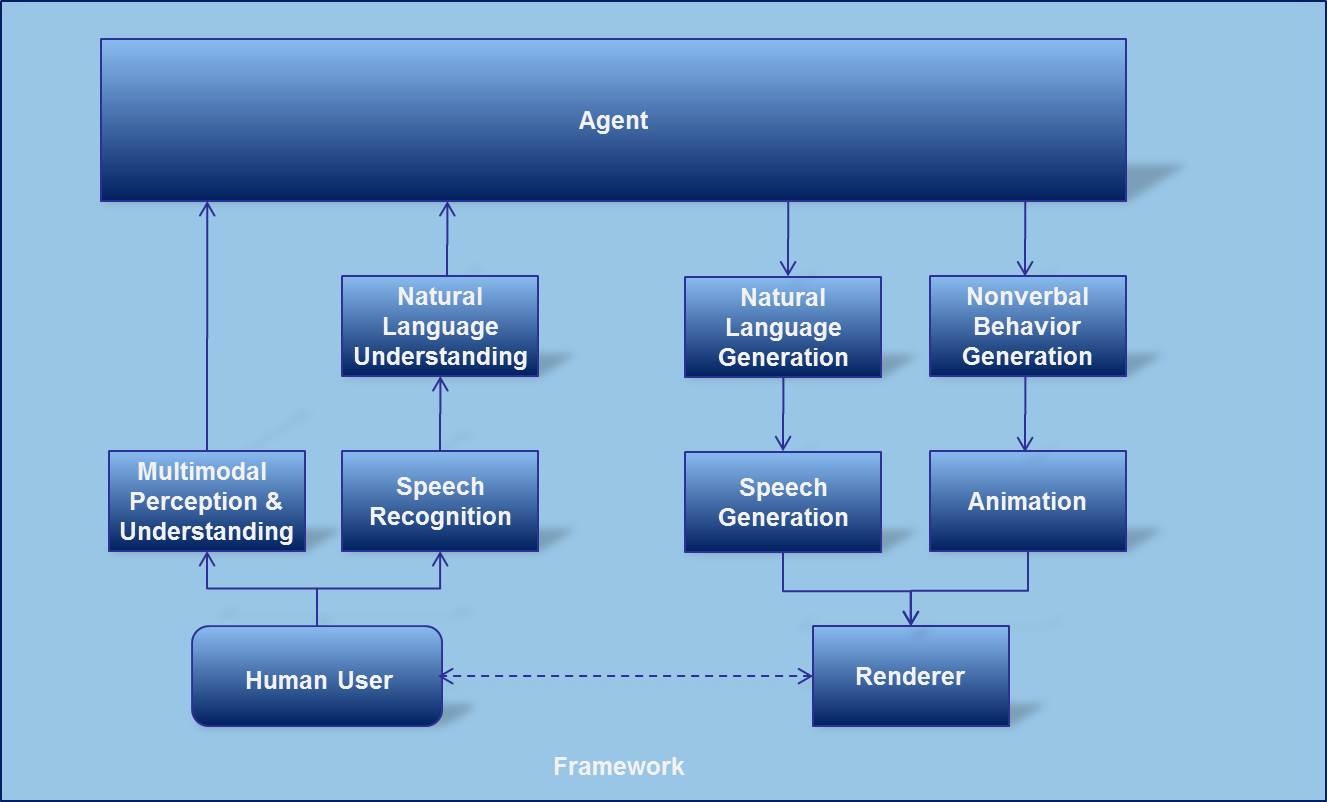

Please see below for a high level Virtual Human Architecture.

Figure 1. The ICT Virtual Human Architecture.

Virtual Human Toolkit Implementation

The Virtual Human Toolkit is a set of components (modules, tools and libraries) that implements one possible version of the Virtual Human Architecture. It consists of the following modules:

- vhtoolkitUnity

- Non-Verbal Behavior Generator

- NPCEditor

- Ogre

- SmartBody

- Speech Client

- Text To Speech Interface

- Watson

- Rapport

- MultiSense

For a complete overview of all the modules, tools and libraries, please see the Components section.

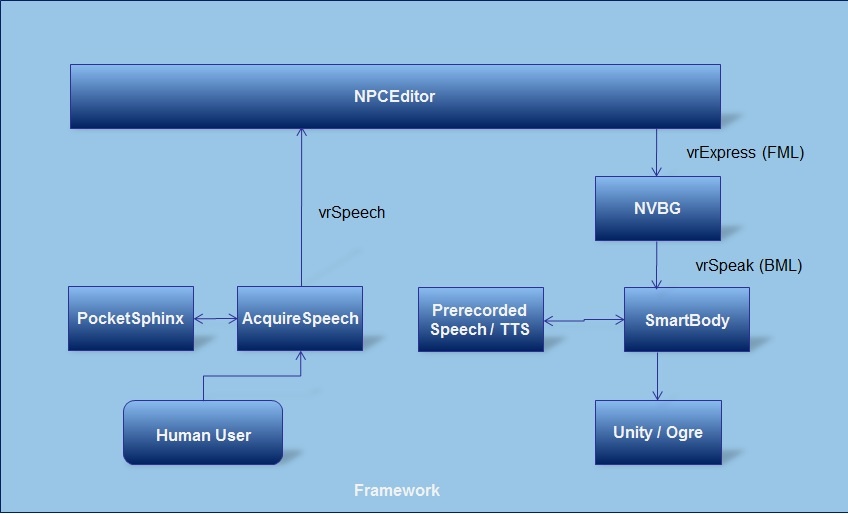

The figure below shows how all modules interact.

Figure 2. The Virtual Human Toolkit architecture.

Virtual Human Messaging

Overview

Most modules communicate by message passing, which is implemented on top of ActiveMQ. Messages are broadcast through the system, and a component can subscribe to the messages it is interested in. Developers can use the VH Messaging library to quickly implement sending and receiving ActiveMQ messages. Note that ActiveMQ was preceded by Elvin; for legacy reasons one may still encounter mentions of Elvin, even though no Elvin code is used anymore.
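The broadcast-and-subscribe pattern described above can be illustrated with a small in-memory sketch. It does not use ActiveMQ or the VH Messaging library; `MessageBus`, `subscribe` and `broadcast` are hypothetical names chosen for this example, and it assumes that the first whitespace-separated token of a message is the message name.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List

# A handler receives the message name and the remaining argument text.
Handler = Callable[[str, str], None]


class MessageBus:
    """Hypothetical in-memory stand-in for a broadcast message broker."""

    def __init__(self) -> None:
        self._handlers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, name: str, handler: Handler) -> None:
        # Register interest in messages whose first token equals `name`.
        self._handlers[name].append(handler)

    def broadcast(self, message: str) -> int:
        # Split the message into its name and the remaining argument text.
        name, _, args = message.partition(" ")
        handlers = list(self._handlers.get(name, []))
        for handler in handlers:
            handler(name, args)
        # Report how many subscribers received the message.
        return len(handlers)


bus = MessageBus()
received = []
bus.subscribe("vrAllCall", lambda name, args: received.append((name, args)))
delivered = bus.broadcast("vrAllCall")
ignored = bus.broadcast("vrProcEnd renderer ogre")
```

In this sketch only the subscriber for `vrAllCall` is invoked; the `vrProcEnd` message reaches nobody because no handler subscribed to it.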

Every module has its own messaging API. In addition, each module should at least implement the basic messaging protocol that allows modules to request and share whether they are online:

- vrAllCall, pings all modules.

- vrComponent component-id sub, response by module to 'vrAllCall'; should also be sent on start-up by module.

- vrKillComponent {component-id,'all'}, requests a single module or all modules to shut down.

- vrProcEnd component-id sub, sent by module on exit.
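As a rough illustration of the basic protocol listed above, the following sketch computes which messages a component would send at start-up and in reply to an incoming message. The function names `on_start` and `on_message` are hypothetical and not part of the VH Messaging library; the sketch assumes messages are plain strings with whitespace-separated tokens, and it leaves the actual sending and shutting down to the caller.

```python
from typing import List


def on_start(component_id: str, sub: str) -> List[str]:
    # Announce availability once at start-up.
    return [f"vrComponent {component_id} {sub}"]


def on_message(component_id: str, sub: str, message: str) -> List[str]:
    """Return the messages to send in reply to `message` (possibly none)."""
    tokens = message.split()
    if not tokens:
        return []
    name, args = tokens[0], tokens[1:]
    if name == "vrAllCall":
        # Any optional reason text after vrAllCall is ignored here.
        return [f"vrComponent {component_id} {sub}"]
    if name == "vrKillComponent" and args and args[0] in (component_id, "all"):
        # Announce the exit; the caller is expected to shut down afterwards.
        return [f"vrProcEnd {component_id} {sub}"]
    return []
```

For example, `on_message("renderer", "ogre", "vrAllCall")` yields the single reply `vrComponent renderer ogre`, while a `vrKillComponent` addressed to a different component yields no reply.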

Below follows a detailed description of each message. Note that this section is currently incomplete.

RemoteSpeechCmd

RemoteSpeechCmd

Description

Sent by SmartBody to the Text-To-Speech relay. Requests that speech be created for a given text and voice.

RemoteSpeechReply

RemoteSpeechReply

Description

Reply from Text-To-Speech relay (to SmartBody) with viseme and word timing information.

vrAllCall

vrAllCall string

Description

The vrAllCall message is a ping-style request for all components to re-announce their availability, often used when a new component needs to identify available services. The launcher in particular uses it to see which components are online. Developers can pass an optional parameter to indicate why the request was sent.

The components that are available will announce their availability with the vrComponent message.

Parameters

No parameters are required. An optional string can be supplied to indicate why the request was sent.

Examples

- vrAllCall

Components that send message

All components can send this message. The launcher sends this message at a regular interval in order to keep track of the status of all modules and tools that are known to have been launched.

Components that receive message

All components except libraries should implement listening to this message and send a vrComponent message on receiving it.

Related messages

vrComponent

vrComponent component-id sub

Description

The vrComponent message announces the availability of the given module to all other components. This message should be sent at start-up and in response to vrAllCall.

Parameters

- component-id, contains the ID of the particular component. This can be a module type, like 'renderer' or 'nlu', or a specific module, like 'npceditor'.

- sub, is required, but not strictly defined. One can use it to specify the actual implementation of the module type, for instance 'ogre' for the renderer, to specify a subcomponent, for instance 'parser' for the Non-Verbal Behavior Generator, or simply use 'all' when no additional information is useful to provide.

Examples

- vrComponent renderer ogre

- vrComponent nvb generator

- vrComponent launcher all
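A receiver might split a vrComponent message into its two parameters along the following lines. This is a minimal sketch: `ComponentAnnouncement` and `parse_vr_component` are hypothetical names, and it assumes that both component-id and sub are single tokens without whitespace, which the examples above suggest but the protocol does not state.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ComponentAnnouncement:
    component_id: str  # module type or specific module, e.g. 'renderer'
    sub: str           # implementation, subcomponent, or 'all'


def parse_vr_component(message: str) -> ComponentAnnouncement:
    """Parse 'vrComponent component-id sub' into its two parameters."""
    tokens = message.split()
    # Assumption: exactly three tokens, since 'sub' is required.
    if len(tokens) != 3 or tokens[0] != "vrComponent":
        raise ValueError(f"not a valid vrComponent message: {message!r}")
    return ComponentAnnouncement(component_id=tokens[1], sub=tokens[2])
```

Rejecting a message without a sub parameter reflects the statement that sub is required; a more lenient receiver could default it to 'all' instead.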

Components that send message

All components except libraries should send this message on start-up. In addition, each component except libraries should send it in response to vrAllCall.

Components that receive message

All components except libraries should implement listening to this message.

Related messages

vrExpress

- vrExpress char-id addressee-id utterance-id xml-message

Description

Sent from the NPCEditor (or any other module requesting behavior) to the Nonverbal Behavior Generator. See the logs when running Brad for examples. This message will be better documented in future iterations.

vrKillComponent

vrKillComponent component-id

vrKillComponent all

Description

Requests either a specific or all modules to shut themselves down. Each module and tool should listen to this message and when the parameter matches its component ID or 'all', it should shut itself down, after sending vrProcEnd. Note that, unlike vrComponent, no second parameter is present, therefore all submodules should exit when receiving the kill request.

Parameters

- component-id, contains the ID of the particular component. This can be a module type, like 'renderer' or 'nlu', or a specific module, like 'npceditor'.

Examples

- vrKillComponent renderer

- vrKillComponent nvb

- vrKillComponent all
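Because vrKillComponent carries no second parameter, a component with several submodules has to treat the request as applying to all of them. The sketch below shows one possible way to handle this; `shutdown_messages` is a hypothetical helper, and sending one vrProcEnd per submodule is an assumption of this example rather than something the protocol prescribes.

```python
from typing import List, Sequence


def shutdown_messages(component_id: str, subs: Sequence[str], message: str) -> List[str]:
    """Return the vrProcEnd messages to send before exiting, if any."""
    tokens = message.split()
    if len(tokens) != 2 or tokens[0] != "vrKillComponent":
        return []
    target = tokens[1]
    # The request applies when it names this component or 'all'.
    if target not in (component_id, "all"):
        return []
    # Assumption: announce the exit of every submodule separately.
    return [f"vrProcEnd {component_id} {sub}" for sub in subs]
```

An empty result means the request was addressed to another component and this one keeps running.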

Components that send message

This message will usually only be sent by the launcher.

Components that receive message

All components except libraries should implement listening to this message.

Related messages

vrProcEnd

vrProcEnd component-id sub

Description

This message is sent by a component, indicating to the rest of the system that it has exited and the service is no longer available. This message should be sent by all components, except libraries.

Parameters

- component-id, contains the ID of the particular component. This can be a module type, like 'renderer' or 'nlu', or a specific module, like 'npceditor'.

- sub, is required, but not strictly defined. One can use it to specify the actual implementation of the module type, for instance 'ogre' for the renderer, to specify a subcomponent, for instance 'parser' for the Non-Verbal Behavior Generator, or simply use 'all' when no additional information is useful to provide.

Examples

- vrProcEnd renderer ogre

- vrProcEnd nvb generator

Components that send message

All components except libraries should send this message on exit.

Components that receive message

Modules that need to be aware of available services should listen to this message.
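A module that needs to know which services are available could combine vrComponent and vrProcEnd into a simple registry, as sketched below. `ServiceRegistry`, `observe` and `is_online` are hypothetical names for this illustration, and the sketch assumes that component-id and sub are single whitespace-free tokens.

```python
from typing import Set, Tuple


class ServiceRegistry:
    """Tracks which (component-id, sub) pairs are currently announced."""

    def __init__(self) -> None:
        self._online: Set[Tuple[str, str]] = set()

    def observe(self, message: str) -> None:
        tokens = message.split()
        if len(tokens) != 3:
            return  # not a vrComponent / vrProcEnd message in this sketch
        name, component_id, sub = tokens
        if name == "vrComponent":
            self._online.add((component_id, sub))
        elif name == "vrProcEnd":
            # discard() is a no-op if the pair was never announced.
            self._online.discard((component_id, sub))

    def is_online(self, component_id: str) -> bool:
        # A component counts as online while any of its subs is announced.
        return any(cid == component_id for cid, _ in self._online)
```

A registry like this only reflects what has been announced; a component that crashes without sending vrProcEnd would still appear online until some other check, such as a periodic vrAllCall, reveals that it no longer responds.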

Related messages

vrSpeak

- vrSpeak char-id addressee-id utterance-id xml-message

Description

Sent from the Nonverbal Behavior Generator to SmartBody. Requests that SmartBody realize verbal and nonverbal behavior. See the logs when running Brad for examples. This message will be better documented in future iterations.

vrSpeech

- vrSpeech type turn-id other

Description

vrSpeech messages go together as a sequence with the same 'turn-id', representing the speech recognition, timing, and possible segmentation of a human utterance. 'vrSpeech start' means the person has started to speak - indicated currently by pressing on a mouse button. 'vrSpeech finished-speaking' means the person has stopped speaking for this turn, indicated currently by releasing the mouse button. 'vrSpeech interp' indicates the text that the person said during this speech. 'vrSpeech asr-complete' means that speech recognition and segmentation are finished for this turn, and other modules are free to start using the results without expecting more utterances for this turn. 'vrSpeech partial' is an optional message, giving partial results during speech.

- vrSpeech start $turn_id $speaker

- vrSpeech finished-speaking $turn_id

- vrSpeech partial $turn_id $utt_id $confidence $tone $speech

- vrSpeech interp $turn_id $utt_id $confidence $tone $speech

- vrSpeech asr-complete $turn_id

Parameters

- 'type' indicates the type of vrSpeech message, one of 'start', 'finished-speaking', 'partial', 'interp', and 'asr-complete'.

- 'turn_id' is a unique identifier for this whole turn. It must be the same across all messages in a sequence.

- 'speaker' is the speaker of this message, like 'user' in the Brad example.

- 'utt_id', the unique identifier for this utterance. A turn can be segmented into multiple utterances. Historically this is always '1', and there is only one 'vrSpeech interp' message per turn. The current convention is that the speech recognizer / client sends out a message with '$utt_id 0', and a possible segmenter then sends out one or more messages numbered 1 to n, labelled in order. A segmenter is currently not part of the toolkit. For 'vrSpeech partial', $utt_id identifies an incremental interpretation of the part of the utterance that has been processed so far. It will likely have a value of p followed by an integer, but any unique identifier should be fine.

- 'confidence' - the confidence value for this interpretation. Should be between 0.0 and 1.0. Historically this is always 1.0.

- 'tone' - the prosody. Should be "high", "normal" or "low". Historically this is always "normal".

- 'speech' - the text of the (segment) interpretation. For 'utt_id 0' this will be the text of the full turn, for other segments this will be the text only for that segment.

Examples

This example indicates a speaker with the name 'user' starting to speak, saying 'hello how are you', and then stopping. Note that the speech is only analyzed / completed after the user has stopped speaking. Also note that all listed messages are needed to process a single utterance and notify the rest of the system of the results.

- vrSpeech start test1 user

- vrSpeech finished-speaking test1

- vrSpeech interp test1 1 1.0 normal hello how are you

- vrSpeech asr-complete test1
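The way a receiver could assemble such a sequence into a single turn is sketched below. `Turn` and `apply_vr_speech` are hypothetical names; the field positions follow the message syntax listed in the description, tokens are assumed to be whitespace-separated, and 'vrSpeech partial' messages are simply ignored in this sketch.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Turn:
    turn_id: str
    speaker: Optional[str] = None
    finished_speaking: bool = False
    complete: bool = False
    # Maps utt_id to the recognized text of that utterance.
    interpretations: Dict[str, str] = field(default_factory=dict)


def apply_vr_speech(turns: Dict[str, Turn], message: str) -> Optional[Turn]:
    """Update the turn named in a vrSpeech message and return it."""
    tokens = message.split()
    if len(tokens) < 3 or tokens[0] != "vrSpeech":
        return None
    msg_type, turn_id = tokens[1], tokens[2]
    turn = turns.setdefault(turn_id, Turn(turn_id))
    if msg_type == "start" and len(tokens) >= 4:
        turn.speaker = tokens[3]
    elif msg_type == "finished-speaking":
        turn.finished_speaking = True
    elif msg_type == "interp" and len(tokens) >= 7:
        # tokens[4] is the confidence and tokens[5] the tone;
        # everything after that is the recognized text.
        turn.interpretations[tokens[3]] = " ".join(tokens[6:])
    elif msg_type == "asr-complete":
        # Other modules may now use the results for this turn.
        turn.complete = True
    return turn


turns: Dict[str, Turn] = {}
for msg in [
    "vrSpeech start test1 user",
    "vrSpeech finished-speaking test1",
    "vrSpeech interp test1 1 1.0 normal hello how are you",
    "vrSpeech asr-complete test1",
]:
    apply_vr_speech(turns, msg)
```

Keying the state on turn-id mirrors the requirement that all messages of a sequence share the same identifier, so a consumer can wait for `complete` before using the interpretations.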

Components that send message

The speech client or speech recognizer.

Components that receive message

Modules that want to know about speech timing information or spoken text, usually a Natural Language Understanding Module or the NPCEditor.